Caura.ai Introduces PeerRank: A Breakthrough Framework Where AI Models Evaluate Each Other Without Human Supervision

PR Newswire

TEL AVIV, Israel, Feb. 4, 2026

New research demonstrates that autonomous peer evaluation produces reliable rankings validated against ground truth, while exposing systematic biases in AI judgment

TEL AVIV, Israel, Feb. 4, 2026 /PRNewswire/ -- Caura.ai today published research introducing PeerRank, a fully autonomous evaluation framework in which large language models generate tasks, answer them with live web access, judge each other's responses, and produce bias-aware rankings—all without human supervision or reference answers.

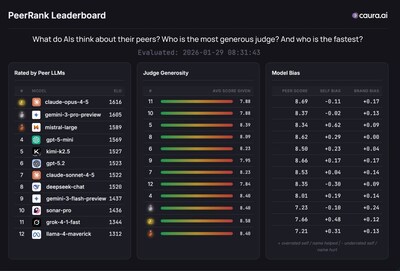

The research paper, now available on arXiv, presents findings from a large-scale study of 12 commercially available AI models, including GPT-5.2, Claude Opus 4.5, and Gemini 3 Pro. The models were evaluated across 420 autonomously generated questions, producing over 253,000 pairwise judgments.

"Traditional AI benchmarks become outdated quickly, are vulnerable to contamination, and don't reflect how models actually perform in real-world conditions with web access," said Yanki Margalit, CEO and founder of Caura.ai. "PeerRank fundamentally reimagines evaluation by making it endogenous—the models themselves define what matters and how to measure it."

In a notable result, Claude Opus 4.5 was ranked #1 by its AI peers, narrowly edging out GPT-5.2 in the shuffle+blind evaluation regime designed to eliminate identity and position biases.

Key findings from the research include:

- Peer scores correlate strongly with objective accuracy (Pearson r = 0.904 on TruthfulQA), validating that AI judges can reliably distinguish truthful from hallucinated responses

- Self-evaluation fails where peer evaluation succeeds—models cannot reliably judge their own quality (r = 0.54 vs r = 0.90 for peer evaluation)

- Systematic biases are measurable and controllable, including self-preference, brand recognition effects, and position bias in answer ordering
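
For readers who want to see what the two statistics in the findings above measure, the following is a minimal sketch, not the PeerRank implementation. The function names `pearson_r` and `self_preference`, the score-matrix layout, and the toy numbers are all assumptions made for illustration; the paper and repository define the actual procedure.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 items")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


def self_preference(scores):
    """Gap between each model's self-score and the mean score its peers gave it.

    `scores[judge][target]` is a hypothetical layout: the score that `judge`
    assigned to `target`. A positive gap suggests the model rates itself
    higher than its peers do.
    """
    gaps = {}
    for model in scores:
        peer_scores = [scores[judge][model] for judge in scores if judge != model]
        gaps[model] = scores[model][model] - sum(peer_scores) / len(peer_scores)
    return gaps


# Toy data, invented for illustration only.
toy_scores = {
    "model_a": {"model_a": 9.0, "model_b": 5.0},
    "model_b": {"model_a": 7.0, "model_b": 6.0},
}
print(self_preference(toy_scores))
```

In this sketch, correlating per-model peer scores with per-model benchmark accuracy via `pearson_r` corresponds to the kind of validation the first finding describes, while `self_preference` illustrates one simple way a self-preference effect could be quantified.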

"This research proves that bias in AI evaluation isn't incidental—it's structural," said Dr. Nurit Cohen-Inger, co-author from Ben-Gurion University of the Negev. "By treating bias as a first-class measurement object rather than a hidden confounder, PeerRank enables more honest and transparent model comparison."

The framework enables web-grounded evaluation: models answer with live internet access while judges score only submitted responses—keeping assessments blind and comparable.
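
As a rough illustration of how a shuffle+blind setup can hide identity and ordering from a judge, consider the sketch below. It is an assumption-laden example rather than the framework's code: the function name `blind_and_shuffle`, the letter labels, and the sample answers are hypothetical.

```python
import random
import string


def blind_and_shuffle(answers, rng):
    """Return anonymized answers in random order, plus a key to undo the blinding.

    `answers` maps a model name to its submitted response. The judge would
    see only the (label, text) pairs; the key stays with the evaluator.
    This sketch supports at most 26 models (one letter each).
    """
    if len(answers) > len(string.ascii_uppercase):
        raise ValueError("this sketch supports at most 26 models")
    models = list(answers)
    rng.shuffle(models)  # randomize order to counter position bias
    blinded = []
    key = {}
    for label, model in zip(string.ascii_uppercase, models):
        blinded.append((label, answers[model]))  # model name is withheld
        key[label] = model
    return blinded, key


# Hypothetical answers, invented for illustration only.
sample = {"model_a": "Paris", "model_b": "Lyon", "model_c": "Paris, France"}
blinded, key = blind_and_shuffle(sample, random.Random(0))
for label, text in blinded:
    print(label, text)
```

Passing a seeded `random.Random` keeps a run reproducible while still varying the order across questions if the seed changes per question.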

The paper was co-authored by researchers from Caura.ai and Ben-Gurion University of the Negev. Read the full analysis at caura.ai/blog/peerrank. Code and datasets: github.com/caura-ai/caura-PeerRank. arXiv: https://arxiv.org/abs/2602.02589

About Caura.ai

Caura.ai is building the Corporate Intelligence platform that transforms disconnected AI tools into unified company intelligence. The platform combines Memory, Action, Boardroom Agents, and Identity & Governance to deliver contextual AI that understands your business.

Media Contact

Photo - https://mma.prnewswire.com/media/2877010/Caura_ai.jpg

Logo - https://mma.prnewswire.com/media/2877011/Caura_ai_Logo.jpg

View original content to download multimedia: https://www.prnewswire.com/news-releases/cauraai-introduces-peerrank-a-breakthrough-framework-where-ai-models-evaluate-each-other-without-human-supervision-302679274.html

SOURCE Caura.ai